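Hello. This is Kim Su-hyeon (김수현) from ManVSCloud.

In this post I run I/O performance tests on two of Naver Cloud's Storage products: Block Storage and NAS.

Object Storage is excluded because its performance depends on the tool used to mount it, such as s3fs, goofys, or rclone.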

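For SSD Block Storage, IOPS varies with capacity, and the provisioned value is shown when the storage is created.

(10 to 100 GB is fixed at 4,000 IOPS. Beyond that, each additional 1 GB adds 40 IOPS, up to a maximum of 20,000 IOPS (4 KiB I/O).)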
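As a quick sanity check of the rule above, here is a minimal shell sketch. The function name `ssd_iops` is hypothetical, and the formula is only my reading of the description (flat 4,000 IOPS up to 100 GB, 40 IOPS per extra GB, capped at 20,000). It is not an official Naver Cloud calculator.

```shell
# Estimate the provisioned SSD IOPS for a capacity given in GB.
# ssd_iops is a hypothetical helper based on the rule quoted above.
ssd_iops() {
  ssd_size_gb=$1
  ssd_result=4000                       # flat rate for 10 to 100 GB
  if [ "$ssd_size_gb" -gt 100 ]; then
    # 40 IOPS for every GB above 100 GB
    ssd_result=$(( 4000 + (ssd_size_gb - 100) * 40 ))
  fi
  if [ "$ssd_result" -gt 20000 ]; then
    ssd_result=20000                    # documented maximum
  fi
  echo "$ssd_result"
}

ssd_iops 500   # prints 20000
```

Under this reading, the 500 GB SSD volume used in this post should already sit at the 20,000 IOPS ceiling, which is the figure the SSD result is compared against.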

Today I check whether the stated SSD Storage IOPS is accurate and what level of performance HDD and NAS deliver.

Preparation process and tools

Note that the Block Storage volumes were formatted with xfs and the NAS was mounted with nfsvers=3.


The tool used for the storage performance tests is FIO.

FIO is a scriptable I/O tool for storage benchmarking and drive testing.

The commands below were used to install FIO on Rocky Linux 8.6.

cd /usr/local/src

wget -O fio-3.33.tar.gz https://codeload.github.com/axboe/fio/tar.gz/fio-3.33

tar zxvf fio-3.33.tar.gz

cd fio-fio-3.33/

./configure --prefix=/usr/local/fio

# Build and install into /usr/local/fio so that the symlink target below exists

make && make install

ln -s /usr/local/fio/bin/fio /usr/bin/fio

Finally, I prepared the Block Storage volumes and the NAS at the same size of 500 GB each and mounted them.

[root@iotest-srv mnt]# df -h /mnt/*

Filesystem                       Size  Used Avail Use% Mounted on

/dev/xvdb1                       500G   33M  500G   1% /mnt/hdd

169.254.84.60:/n2594954_iostest  500G  320K  500G   1% /mnt/nas

/dev/xvdc1                       500G   33M  500G   1% /mnt/ssd

RandRead

First, I set the I/O pattern to randread (random read), the block size to 4k, Direct I/O on, and the file size to 1G.
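Every run in this post reuses the same options, and only the target directory, job name, and I/O pattern change. Here is a small sketch of how that could be parameterised. `fio_cmd` is a hypothetical helper that only prints the command line so it can be reviewed before being run, and every flag is copied from the runs shown in this post.

```shell
# Print the fio command line for one target (hypothetical helper).
# It does not execute fio; pipe the output to sh to actually run it.
fio_cmd() {
  target_dir=$1   # mount point to test, e.g. /mnt/hdd
  job_name=$2     # label shown in the fio output, e.g. hdd-test
  io_pattern=$3   # randread or randwrite
  echo "fio --directory=$target_dir --name $job_name --direct=1" \
       "--rw=$io_pattern --bs=4k --size=1G --numjobs=10 --time_based" \
       "--runtime=180 --group_reporting --norandommap"
}

fio_cmd /mnt/ssd ssd-test randread
```

Here `--direct=1` requests non-buffered (O_DIRECT) I/O so the page cache does not inflate the numbers, `--time_based` with `--runtime=180` keeps each job running for 180 seconds regardless of file size, and `--group_reporting` merges the 10 jobs into one summary.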

※ Block Storage – HDD (500GB)

Test results for the 500 GB HDD.

read: IOPS=806 (average), BW=3226KiB/s (3304kB/s)(567MiB/180044msec)

[root@iotest-srv ~]# fio --directory=/mnt/hdd --name hdd-test --direct=1 \

> --rw=randread --bs=4k --size=1G --numjobs=10 --time_based \

> --runtime=180 --group_reporting --norandommap

hdd-test: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.33

Starting 10 processes

hdd-test: Laying out IO file (1 file / 1024MiB)

hdd-test: Laying out IO file (1 file / 1024MiB)

hdd-test: Laying out IO file (1 file / 1024MiB)

hdd-test: Laying out IO file (1 file / 1024MiB)

hdd-test: Laying out IO file (1 file / 1024MiB)

hdd-test: Laying out IO file (1 file / 1024MiB)

hdd-test: Laying out IO file (1 file / 1024MiB)

hdd-test: Laying out IO file (1 file / 1024MiB)

hdd-test: Laying out IO file (1 file / 1024MiB)

hdd-test: Laying out IO file (1 file / 1024MiB)

Jobs: 10 (f=10): [r(10)][100.0%][r=7871KiB/s][r=1967 IOPS][eta 00m:00s]

hdd-test: (groupid=0, jobs=10): err= 0: pid=7605: Thu Dec 22 16:39:27 2022

read: IOPS=806, BW=3226KiB/s (3304kB/s)(567MiB/180044msec)

clat (usec): min=230, max=116766, avg=12390.36, stdev=17019.62

lat (usec): min=230, max=116767, avg=12391.64, stdev=17019.68

clat percentiles (usec):

| 1.00th=[ 265], 5.00th=[ 306], 10.00th=[ 570], 20.00th=[ 709],

| 30.00th=[ 791], 40.00th=[ 1385], 50.00th=[ 5145], 60.00th=[ 7439],

| 70.00th=[10683], 80.00th=[21890], 90.00th=[43779], 95.00th=[52691],

| 99.00th=[60556], 99.50th=[62129], 99.90th=[68682], 99.95th=[71828],

| 99.99th=[82314]

bw ( KiB/s): min= 872, max=25140, per=99.87%, avg=3222.25, stdev=405.89, samples=3590

iops : min= 218, max= 6285, avg=805.54, stdev=101.47, samples=3590

lat (usec) : 250=0.15%, 500=8.62%, 750=16.63%, 1000=12.62%

lat (msec) : 2=3.20%, 4=4.84%, 10=21.99%, 20=10.44%, 50=14.94%

lat (msec) : 100=6.56%, 250=0.01%

cpu : usr=0.06%, sys=0.15%, ctx=145228, majf=0, minf=113

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=145210,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=3226KiB/s (3304kB/s), 3226KiB/s-3226KiB/s (3304kB/s-3304kB/s), io=567MiB (595MB), run=180044-180044msec

Disk stats (read/write):

xvdb: ios=145223/9, merge=0/3, ticks=1796156/543, in_queue=1796698, util=100.00%

※ Block Storage – SSD (500GB)

Test results for the 500 GB SSD.

read: IOPS=19.8k (average), BW=77.3MiB/s (81.0MB/s)(13.6GiB/180002msec)

[root@iotest-srv ~]# fio --directory=/mnt/ssd --name ssd-test --direct=1 --rw=randread --bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap

ssd-test: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.33

Starting 10 processes

ssd-test: Laying out IO file (1 file / 1024MiB)

ssd-test: Laying out IO file (1 file / 1024MiB)

ssd-test: Laying out IO file (1 file / 1024MiB)

ssd-test: Laying out IO file (1 file / 1024MiB)

ssd-test: Laying out IO file (1 file / 1024MiB)

ssd-test: Laying out IO file (1 file / 1024MiB)

ssd-test: Laying out IO file (1 file / 1024MiB)

ssd-test: Laying out IO file (1 file / 1024MiB)

ssd-test: Laying out IO file (1 file / 1024MiB)

ssd-test: Laying out IO file (1 file / 1024MiB)

Jobs: 10 (f=10): [r(10)][100.0%][r=77.8MiB/s][r=19.9k IOPS][eta 00m:00s]

ssd-test: (groupid=0, jobs=10): err= 0: pid=7849: Thu Dec 22 16:45:53 2022

read: IOPS=19.8k, BW=77.3MiB/s (81.0MB/s)(13.6GiB/180002msec)

clat (usec): min=205, max=41639, avg=500.10, stdev=570.35

lat (usec): min=206, max=41640, avg=501.37, stdev=570.34

clat percentiles (usec):

| 1.00th=[ 310], 5.00th=[ 351], 10.00th=[ 371], 20.00th=[ 404],

| 30.00th=[ 429], 40.00th=[ 449], 50.00th=[ 474], 60.00th=[ 494],

| 70.00th=[ 519], 80.00th=[ 553], 90.00th=[ 603], 95.00th=[ 660],

| 99.00th=[ 840], 99.50th=[ 1012], 99.90th=[ 3130], 99.95th=[10159],

| 99.99th=[32637]

bw ( KiB/s): min=51700, max=87202, per=100.00%, avg=79192.18, stdev=525.80, samples=3590

iops : min=12920, max=21800, avg=19797.29, stdev=131.49, samples=3590

lat (usec) : 250=0.01%, 500=62.46%, 750=35.67%, 1000=1.34%

lat (msec) : 2=0.37%, 4=0.06%, 10=0.03%, 20=0.03%, 50=0.03%

cpu : usr=0.93%, sys=2.54%, ctx=3561562, majf=0, minf=118

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=3560365,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=77.3MiB/s (81.0MB/s), 77.3MiB/s-77.3MiB/s (81.0MB/s-81.0MB/s), io=13.6GiB (14.6GB), run=180002-180002msec

Disk stats (read/write):

xvdc: ios=3556956/9, merge=0/3, ticks=1716711/8, in_queue=1716719, util=100.00%

※ NAS (500GB)

Test results for the 500 GB NAS.

read: IOPS=3007 (average), BW=11.7MiB/s (12.3MB/s)(2115MiB/180009msec)

[root@iotest-srv ~]# fio --directory=/mnt/nas --name nas-test --direct=1 --rw=randread --bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap

nas-test: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.33

Starting 10 processes

nas-test: Laying out IO file (1 file / 1024MiB)

nas-test: Laying out IO file (1 file / 1024MiB)

nas-test: Laying out IO file (1 file / 1024MiB)

nas-test: Laying out IO file (1 file / 1024MiB)

nas-test: Laying out IO file (1 file / 1024MiB)

nas-test: Laying out IO file (1 file / 1024MiB)

nas-test: Laying out IO file (1 file / 1024MiB)

nas-test: Laying out IO file (1 file / 1024MiB)

nas-test: Laying out IO file (1 file / 1024MiB)

nas-test: Laying out IO file (1 file / 1024MiB)

Jobs: 10 (f=10): [r(10)][100.0%][r=11.7MiB/s][r=2998 IOPS][eta 00m:00s]

nas-test: (groupid=0, jobs=10): err= 0: pid=8039: Thu Dec 22 16:51:12 2022

read: IOPS=3007, BW=11.7MiB/s (12.3MB/s)(2115MiB/180009msec)

clat (usec): min=264, max=421511, avg=3318.10, stdev=4991.56

lat (usec): min=264, max=421512, avg=3319.49, stdev=4991.55

clat percentiles (usec):

| 1.00th=[ 326], 5.00th=[ 359], 10.00th=[ 383], 20.00th=[ 424],

| 30.00th=[ 457], 40.00th=[ 494], 50.00th=[ 537], 60.00th=[ 594],

| 70.00th=[ 750], 80.00th=[ 9896], 90.00th=[10290], 95.00th=[10552],

| 99.00th=[19792], 99.50th=[20579], 99.90th=[34341], 99.95th=[48497],

| 99.99th=[62653]

bw ( KiB/s): min= 8860, max=25375, per=100.00%, avg=12037.42, stdev=155.30, samples=3590

iops : min= 2214, max= 6342, avg=3008.65, stdev=38.82, samples=3590

lat (usec) : 500=42.07%, 750=28.04%, 1000=1.91%

lat (msec) : 2=0.29%, 4=0.31%, 10=8.84%, 20=17.72%, 50=0.77%

lat (msec) : 100=0.04%, 250=0.01%, 500=0.01%

cpu : usr=0.16%, sys=0.35%, ctx=549639, majf=0, minf=103

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=541466,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=11.7MiB/s (12.3MB/s), 11.7MiB/s-11.7MiB/s (12.3MB/s-12.3MB/s), io=2115MiB (2218MB), run=180009-180009msec
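Because every job above uses the psync engine at iodepth=1, each of the 10 processes has at most one request in flight, so IOPS should be roughly the number of jobs divided by the average latency. The sketch below checks that against the `lat` averages from the three randread runs. `estimate_iops` is a hypothetical helper, and the relation is an approximation rather than something fio itself reports.

```shell
# Estimate IOPS from concurrency and average latency (Little's law).
# estimate_iops is a hypothetical helper; latency is given in microseconds.
estimate_iops() {
  awk -v jobs="$1" -v lat_usec="$2" \
    'BEGIN { printf "%.0f\n", jobs * 1000000 / lat_usec }'
}

estimate_iops 10 12391.64   # HDD avg lat; fio reported IOPS=806
estimate_iops 10 501.37     # SSD avg lat; fio reported IOPS=19.8k
estimate_iops 10 3319.49    # NAS avg lat; fio reported IOPS=3007
```

All three estimates land within about 1% of the IOPS fio reported, which suggests the results are bounded by per-request latency at this queue depth rather than by the client.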

RandWrite

This time I kept the other options the same and set the I/O pattern to randwrite (random write).

※ Block Storage – HDD (500GB)

Test results for the 500 GB HDD.

write: IOPS=664 (average), BW=2657KiB/s (2720kB/s)(467MiB/180009msec); 0 zone resets

fio --directory=/mnt/hdd --name hdd-write-test --direct=1 --rw=randwrite --bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap

hdd-write-test: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.33

Starting 10 processes

hdd-write-test: Laying out IO file (1 file / 1024MiB)

hdd-write-test: Laying out IO file (1 file / 1024MiB)

hdd-write-test: Laying out IO file (1 file / 1024MiB)

hdd-write-test: Laying out IO file (1 file / 1024MiB)

hdd-write-test: Laying out IO file (1 file / 1024MiB)

hdd-write-test: Laying out IO file (1 file / 1024MiB)

hdd-write-test: Laying out IO file (1 file / 1024MiB)

hdd-write-test: Laying out IO file (1 file / 1024MiB)

hdd-write-test: Laying out IO file (1 file / 1024MiB)

hdd-write-test: Laying out IO file (1 file / 1024MiB)

Jobs: 10 (f=10): [w(10)][100.0%][w=2722KiB/s][w=680 IOPS][eta 00m:00s]

hdd-write-test: (groupid=0, jobs=10): err= 0: pid=8232: Thu Dec 22 16:55:39 2022

write: IOPS=664, BW=2657KiB/s (2720kB/s)(467MiB/180009msec); 0 zone resets

clat (usec): min=423, max=181481, avg=15049.53, stdev=7312.73

lat (usec): min=424, max=181482, avg=15051.01, stdev=7312.70

clat percentiles (usec):

| 1.00th=[ 1319], 5.00th=[ 10683], 10.00th=[ 10945], 20.00th=[ 11207],

| 30.00th=[ 11338], 40.00th=[ 11469], 50.00th=[ 11731], 60.00th=[ 11994],

| 70.00th=[ 21103], 80.00th=[ 21103], 90.00th=[ 21365], 95.00th=[ 21627],

| 99.00th=[ 38011], 99.50th=[ 49546], 99.90th=[ 86508], 99.95th=[104334],

| 99.99th=[139461]

bw ( KiB/s): min= 1240, max= 4088, per=100.00%, avg=2658.54, stdev=22.38, samples=3590

iops : min= 310, max= 1022, avg=664.64, stdev= 5.60, samples=3590

lat (usec) : 500=0.01%, 750=0.04%, 1000=0.10%

lat (msec) : 2=3.07%, 4=0.38%, 10=0.20%, 20=60.94%, 50=34.77%

lat (msec) : 100=0.43%, 250=0.06%

cpu : usr=0.05%, sys=0.23%, ctx=120680, majf=0, minf=100

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,119559,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=2657KiB/s (2720kB/s), 2657KiB/s-2657KiB/s (2720kB/s-2720kB/s), io=467MiB (490MB), run=180009-180009msec

Disk stats (read/write):

xvdb: ios=76/120067, merge=0/2, ticks=408/1813128, in_queue=1813536, util=99.98%

※ Block Storage – SSD (500GB)

Test results for the 500 GB SSD.

write: IOPS=7090 (average), BW=27.7MiB/s (29.0MB/s)(4986MiB/180003msec); 0 zone resets

[root@iotest-srv ~]# fio --directory=/mnt/ssd --name ssd-write-test --direct=1 --rw=randwrite --bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap

ssd-write-test: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.33

Starting 10 processes

ssd-write-test: Laying out IO file (1 file / 1024MiB)

ssd-write-test: Laying out IO file (1 file / 1024MiB)

ssd-write-test: Laying out IO file (1 file / 1024MiB)

ssd-write-test: Laying out IO file (1 file / 1024MiB)

ssd-write-test: Laying out IO file (1 file / 1024MiB)

ssd-write-test: Laying out IO file (1 file / 1024MiB)

ssd-write-test: Laying out IO file (1 file / 1024MiB)

ssd-write-test: Laying out IO file (1 file / 1024MiB)

ssd-write-test: Laying out IO file (1 file / 1024MiB)

ssd-write-test: Laying out IO file (1 file / 1024MiB)

Jobs: 10 (f=10): [w(10)][100.0%][w=30.8MiB/s][w=7887 IOPS][eta 00m:00s]

ssd-write-test: (groupid=0, jobs=10): err= 0: pid=8367: Thu Dec 22 16:59:28 2022

write: IOPS=7090, BW=27.7MiB/s (29.0MB/s)(4986MiB/180003msec); 0 zone resets

clat (usec): min=309, max=41412, avg=1404.98, stdev=1048.10

lat (usec): min=310, max=41413, avg=1406.30, stdev=1048.11

clat percentiles (usec):

| 1.00th=[ 758], 5.00th=[ 857], 10.00th=[ 930], 20.00th=[ 1123],

| 30.00th=[ 1237], 40.00th=[ 1303], 50.00th=[ 1352], 60.00th=[ 1401],

| 70.00th=[ 1450], 80.00th=[ 1516], 90.00th=[ 1631], 95.00th=[ 1745],

| 99.00th=[ 3621], 99.50th=[ 6063], 99.90th=[11600], 99.95th=[24511],

| 99.99th=[38536]

bw ( KiB/s): min= 6984, max=32814, per=100.00%, avg=28381.93, stdev=346.96, samples=3590

iops : min= 1746, max= 8203, avg=7095.35, stdev=86.73, samples=3590

lat (usec) : 500=0.06%, 750=0.86%, 1000=13.19%

lat (msec) : 2=83.41%, 4=1.54%, 10=0.53%, 20=0.36%, 50=0.06%

cpu : usr=0.38%, sys=1.73%, ctx=1282669, majf=0, minf=102

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,1276356,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=27.7MiB/s (29.0MB/s), 27.7MiB/s-27.7MiB/s (29.0MB/s-29.0MB/s), io=4986MiB (5228MB), run=180003-180003msec

Disk stats (read/write):

xvdc: ios=76/1278783, merge=0/0, ticks=91/1780644, in_queue=1780735, util=100.00%

※ NAS (500GB)

Test results for the 500 GB NAS.

write: IOPS=3007 (average), BW=11.7MiB/s (12.3MB/s)(2115MiB/180010msec); 0 zone resets

[root@iotest-srv ~]# fio --directory=/mnt/nas --name nas-write-test --direct=1 --rw=randwrite --bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap

nas-write-test: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.33

Starting 10 processes

nas-write-test: Laying out IO file (1 file / 1024MiB)

nas-write-test: Laying out IO file (1 file / 1024MiB)

nas-write-test: Laying out IO file (1 file / 1024MiB)

nas-write-test: Laying out IO file (1 file / 1024MiB)

nas-write-test: Laying out IO file (1 file / 1024MiB)

nas-write-test: Laying out IO file (1 file / 1024MiB)

nas-write-test: Laying out IO file (1 file / 1024MiB)

nas-write-test: Laying out IO file (1 file / 1024MiB)

nas-write-test: Laying out IO file (1 file / 1024MiB)

nas-write-test: Laying out IO file (1 file / 1024MiB)

Jobs: 10 (f=10): [w(10)][100.0%][w=11.8MiB/s][w=3017 IOPS][eta 00m:00s]

nas-write-test: (groupid=0, jobs=10): err= 0: pid=8522: Thu Dec 22 17:03:23 2022

write: IOPS=3007, BW=11.7MiB/s (12.3MB/s)(2115MiB/180010msec); 0 zone resets

clat (usec): min=310, max=49414, avg=3317.67, stdev=4466.56

lat (usec): min=311, max=49415, avg=3319.31, stdev=4466.51

clat percentiles (usec):

| 1.00th=[ 392], 5.00th=[ 433], 10.00th=[ 461], 20.00th=[ 506],

| 30.00th=[ 545], 40.00th=[ 586], 50.00th=[ 627], 60.00th=[ 685],

| 70.00th=[ 848], 80.00th=[10159], 90.00th=[10421], 95.00th=[10552],

| 99.00th=[10814], 99.50th=[10945], 99.90th=[20317], 99.95th=[20579],

| 99.99th=[42206]

bw ( KiB/s): min=10288, max=23820, per=100.00%, avg=12039.65, stdev=107.01, samples=3590

iops : min= 2572, max= 5955, avg=3009.91, stdev=26.75, samples=3590

lat (usec) : 500=18.69%, 750=47.48%, 1000=5.40%

lat (msec) : 2=0.49%, 4=0.10%, 10=4.99%, 20=22.71%, 50=0.16%

cpu : usr=0.18%, sys=0.36%, ctx=550441, majf=0, minf=91

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,541467,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=11.7MiB/s (12.3MB/s), 11.7MiB/s-11.7MiB/s (12.3MB/s-12.3MB/s), io=2115MiB (2218MB), run=180010-180010msec

NAS Storage Additional Checks

NAS Storage has a minimum capacity of 500 GB and supports up to 10 TB (larger capacities are available through a service inquiry).

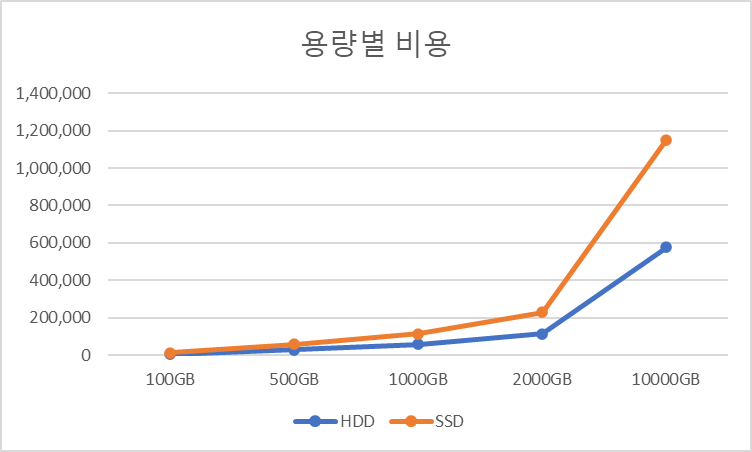

I wondered whether Naver Cloud's NAS product also gains IOPS as its capacity grows, so I ran some additional tests.

※ NAS (3000GB) – READ

Read test results for the 3,000 GB NAS.

read: IOPS=4010 (average), BW=15.7MiB/s (16.4MB/s)(2820MiB/180004msec)

[root@iotest-srv ~]# fio --directory=/mnt/nas --name nas-read-3000 --direct=1 --rw=randread --bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap

nas-read-3000: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.33

Starting 10 processes

nas-read-3000: Laying out IO file (1 file / 1024MiB)

nas-read-3000: Laying out IO file (1 file / 1024MiB)

nas-read-3000: Laying out IO file (1 file / 1024MiB)

nas-read-3000: Laying out IO file (1 file / 1024MiB)

nas-read-3000: Laying out IO file (1 file / 1024MiB)

nas-read-3000: Laying out IO file (1 file / 1024MiB)

nas-read-3000: Laying out IO file (1 file / 1024MiB)

nas-read-3000: Laying out IO file (1 file / 1024MiB)

nas-read-3000: Laying out IO file (1 file / 1024MiB)

nas-read-3000: Laying out IO file (1 file / 1024MiB)

Jobs: 10 (f=10): [r(10)][100.0%][r=15.5MiB/s][r=3975 IOPS][eta 00m:00s]

nas-read-3000: (groupid=0, jobs=10): err= 0: pid=9127: Thu Dec 22 17:18:34 2022

read: IOPS=4010, BW=15.7MiB/s (16.4MB/s)(2820MiB/180004msec)

clat (usec): min=262, max=47989, avg=2487.06, stdev=3939.04

lat (usec): min=263, max=47990, avg=2488.47, stdev=3939.03

clat percentiles (usec):

| 1.00th=[ 330], 5.00th=[ 367], 10.00th=[ 392], 20.00th=[ 424],

| 30.00th=[ 457], 40.00th=[ 490], 50.00th=[ 529], 60.00th=[ 578],

| 70.00th=[ 644], 80.00th=[ 9634], 90.00th=[10159], 95.00th=[10290],

| 99.00th=[10552], 99.50th=[10683], 99.90th=[12387], 99.95th=[20317],

| 99.99th=[36963]

bw ( KiB/s): min=13636, max=31659, per=100.00%, avg=16050.09, stdev=150.93, samples=3590

iops : min= 3408, max= 7912, avg=4011.53, stdev=37.73, samples=3590

lat (usec) : 500=43.54%, 750=33.48%, 1000=2.27%

lat (msec) : 2=0.20%, 4=0.07%, 10=6.84%, 20=13.54%, 50=0.06%

cpu : usr=0.20%, sys=0.46%, ctx=734586, majf=0, minf=103

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=721930,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=15.7MiB/s (16.4MB/s), 15.7MiB/s-15.7MiB/s (16.4MB/s-16.4MB/s), io=2820MiB (2957MB), run=180004-180004msec

※ NAS (3000GB) – WRITE

Write test results for the 3,000 GB NAS.

write: IOPS=4010 (average), BW=15.7MiB/s (16.4MB/s)(2820MiB/180006msec); 0 zone resets

[root@iotest-srv ~]# fio --directory=/mnt/nas --name nas-write-3000 --direct=1 --rw=randwrite --bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap

nas-read-test: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.33

Starting 10 processes

nas-read-test: Laying out IO file (1 file / 1024MiB)

nas-read-test: Laying out IO file (1 file / 1024MiB)

nas-read-test: Laying out IO file (1 file / 1024MiB)

nas-read-test: Laying out IO file (1 file / 1024MiB)

nas-read-test: Laying out IO file (1 file / 1024MiB)

nas-read-test: Laying out IO file (1 file / 1024MiB)

nas-read-test: Laying out IO file (1 file / 1024MiB)

nas-read-test: Laying out IO file (1 file / 1024MiB)

nas-read-test: Laying out IO file (1 file / 1024MiB)

nas-read-test: Laying out IO file (1 file / 1024MiB)

Jobs: 10 (f=10): [w(10)][100.0%][w=15.7MiB/s][w=4031 IOPS][eta 00m:00s]

nas-read-test: (groupid=0, jobs=10): err= 0: pid=8888: Thu Dec 22 17:13:05 2022

write: IOPS=4010, BW=15.7MiB/s (16.4MB/s)(2820MiB/180006msec); 0 zone resets

clat (usec): min=299, max=44819, avg=2486.71, stdev=3889.15

lat (usec): min=299, max=44821, avg=2488.33, stdev=3889.11

clat percentiles (usec):

| 1.00th=[ 379], 5.00th=[ 416], 10.00th=[ 445], 20.00th=[ 486],

| 30.00th=[ 519], 40.00th=[ 553], 50.00th=[ 586], 60.00th=[ 627],

| 70.00th=[ 685], 80.00th=[ 1631], 90.00th=[10159], 95.00th=[10421],

| 99.00th=[10683], 99.50th=[10683], 99.90th=[12649], 99.95th=[20055],

| 99.99th=[37487]

bw ( KiB/s): min=13456, max=31776, per=100.00%, avg=16052.08, stdev=150.91, samples=3590

iops : min= 3364, max= 7944, avg=4013.02, stdev=37.73, samples=3590

lat (usec) : 500=24.26%, 750=51.08%, 1000=4.29%

lat (msec) : 2=0.39%, 4=0.10%, 10=5.96%, 20=13.86%, 50=0.05%

cpu : usr=0.21%, sys=0.49%, ctx=739927, majf=0, minf=98

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,721931,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=15.7MiB/s (16.4MB/s), 15.7MiB/s-15.7MiB/s (16.4MB/s-16.4MB/s), io=2820MiB (2957MB), run=180006-180006msec

※ NAS (6000GB) – WRITE

Finally, I ran an additional write test on a 6,000 GB NAS.

write: IOPS=6016, BW=23.5MiB/s (24.6MB/s)(4230MiB/180006msec); 0 zone resets

[root@iotest-srv ~]# fio --directory=/mnt/nas --name nas-write-6000 --direct=1 --rw=randwrite --bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap

nas-write-6000: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.33

Starting 10 processes

nas-write-6000: Laying out IO file (1 file / 1024MiB)

nas-write-6000: Laying out IO file (1 file / 1024MiB)

nas-write-6000: Laying out IO file (1 file / 1024MiB)

nas-write-6000: Laying out IO file (1 file / 1024MiB)

nas-write-6000: Laying out IO file (1 file / 1024MiB)

nas-write-6000: Laying out IO file (1 file / 1024MiB)

nas-write-6000: Laying out IO file (1 file / 1024MiB)

nas-write-6000: Laying out IO file (1 file / 1024MiB)

nas-write-6000: Laying out IO file (1 file / 1024MiB)

nas-write-6000: Laying out IO file (1 file / 1024MiB)

Jobs: 10 (f=10): [w(10)][100.0%][w=23.5MiB/s][w=6023 IOPS][eta 00m:00s]

nas-write-6000: (groupid=0, jobs=10): err= 0: pid=9281: Thu Dec 22 17:22:37 2022

write: IOPS=6016, BW=23.5MiB/s (24.6MB/s)(4230MiB/180006msec); 0 zone resets

clat (usec): min=295, max=50306, avg=1655.53, stdev=3114.73

lat (usec): min=296, max=50306, avg=1657.15, stdev=3114.72

clat percentiles (usec):

| 1.00th=[ 367], 5.00th=[ 404], 10.00th=[ 429], 20.00th=[ 465],

| 30.00th=[ 494], 40.00th=[ 519], 50.00th=[ 545], 60.00th=[ 578],

| 70.00th=[ 619], 80.00th=[ 676], 90.00th=[ 9765], 95.00th=[10159],

| 99.00th=[10552], 99.50th=[10683], 99.90th=[10945], 99.95th=[20055],

| 99.99th=[34341]

bw ( KiB/s): min=18720, max=47821, per=100.00%, avg=24080.97, stdev=252.94, samples=3590

iops : min= 4680, max=11954, avg=6020.24, stdev=63.23, samples=3590

lat (usec) : 500=32.71%, 750=52.43%, 1000=2.97%

lat (msec) : 2=0.30%, 4=0.11%, 10=4.53%, 20=6.89%, 50=0.05%

lat (msec) : 100=0.01%

cpu : usr=0.30%, sys=0.74%, ctx=1122030, majf=0, minf=92

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,1083007,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: bw=23.5MiB/s (24.6MB/s), 23.5MiB/s-23.5MiB/s (24.6MB/s-24.6MB/s), io=4230MiB (4436MB), run=180006-180006msec

Result

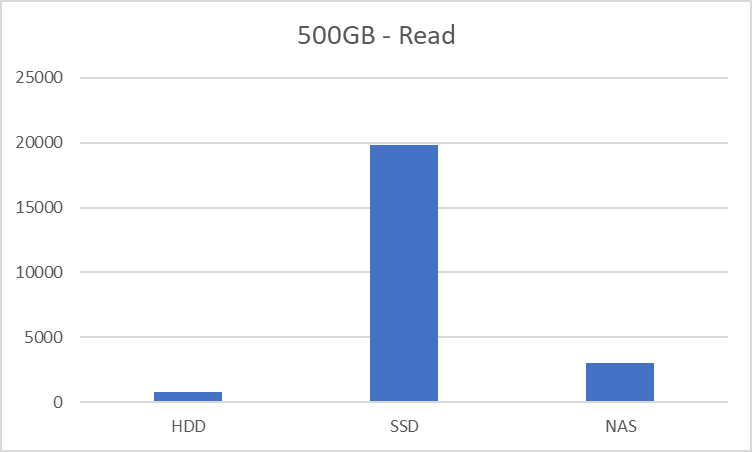

1. Read comparison chart for HDD, SSD, and NAS.

* HDD : 806 iops

* SSD : 19,797 iops

* NAS : 3,008 iops

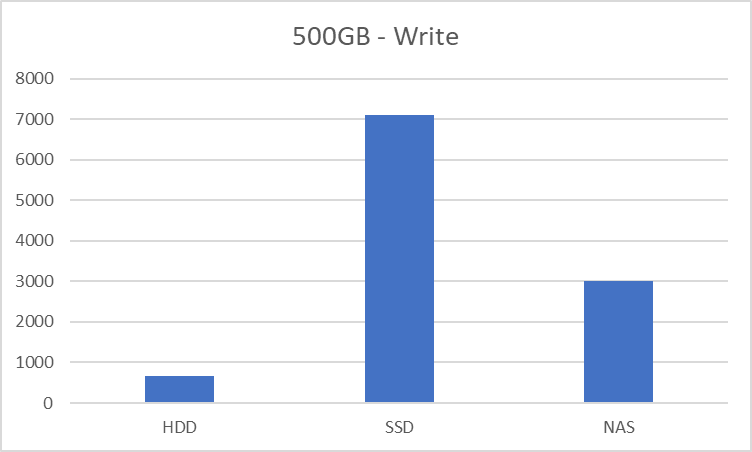

2. Write comparison chart for HDD, SSD, and NAS.

* HDD : 664 iops

* SSD : 7,090 iops

* NAS : 3,007 iops

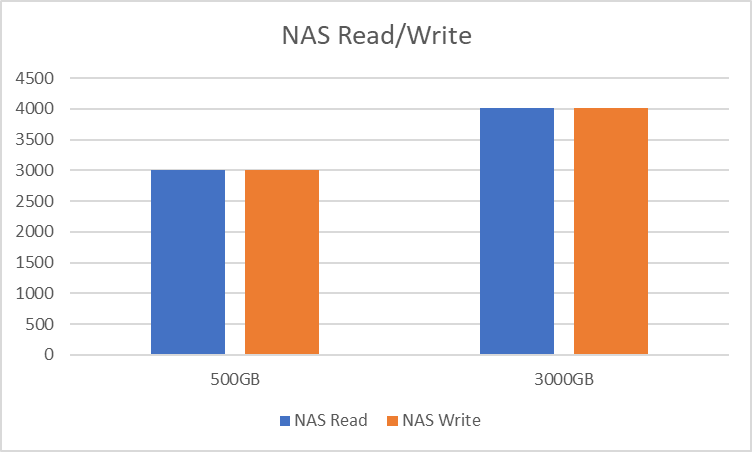

3. Difference between Read and Write IOPS for the NAS product

4. IOPS chart by NAS capacity.

* 3000GB : 4,010 iops

* 6000GB : 6,016 iops
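To put the NAS numbers in perspective, the sketch below computes how many IOPS were gained per additional 1,000 GB between the measured write results (500 GB at 3,007, 3,000 GB at 4,010, 6,000 GB at 6,016). `iops_per_1000gb` is a hypothetical helper, and this is simple arithmetic on the measurements in this post, not a documented Naver Cloud scaling rule.

```shell
# IOPS gained per additional 1,000 GB between two measurements.
# Usage: iops_per_1000gb SIZE1_GB IOPS1 SIZE2_GB IOPS2 (hypothetical helper)
iops_per_1000gb() {
  awk -v s1="$1" -v i1="$2" -v s2="$3" -v i2="$4" \
    'BEGIN { printf "%.0f\n", (i2 - i1) * 1000 / (s2 - s1) }'
}

iops_per_1000gb 500 3007 3000 4010    # prints 401
iops_per_1000gb 3000 4010 6000 6016   # prints 669
```

The two intervals give roughly 401 and 669 IOPS per additional 1,000 GB, so the increase is not a single constant rate in these measurements. With only three data points the exact scaling rule cannot be determined.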

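🔔 Conclusion

- HDD is inexpensive but does not guarantee IOPS.

- SSD supports 4,000 to 20,000 IOPS (4 KiB I/O) depending on capacity, and the figure is shown as soon as the Storage is created, so use it as a reference.

(Measured IOPS can vary with many factors, such as random versus sequential access and read versus write.)

- NAS IOPS also increases as capacity grows.

(But do not forget that NAS is shared storage…)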

Personal Comments

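It is the end of the year and my schedule is fairly full, so time for blog posts is tight.

Still, I am glad I could make time to test the performance of Naver Cloud's storage products and share the results.

I recently attended a meetup for engineers from Naver Cloud Platform partner companies, and while exchanging business cards I heard that many people read and refer to my blog, which made the effort feel worthwhile once again.

I have quite a few fun posts planned and cannot wait to share them.

Thank you for reading this long post.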
